2012-11-18 18:19:19

58 votes, rating 6


IT infrastructure

10 years ago, when FUMBBL was started, the site ran on a single computer. This was a cheap standard desktop machine and didn't have any special hardware at all.

With the popularity of the site growing and the hardware no longer up to par with the load, I upgraded the server to a more powerful machine, retiring the older low-powered one. The new machine was still desktop class (i.e., no server-grade CPUs or memory, and this is still the case today), but much more powerful. As time progressed, the site got more and more popular and I added more and more features, and this machine too ended up not having enough power.

So, I purchased a second machine for the site and installed the database on it. This was a big upgrade in terms of hardware resources, and at the time I was also working hard on optimizing the code to run better (making sure DB queries had relevant indexes and things like that). The site continued to grow and I upgraded hardware as necessary to cope with the load. Some of the old machines, though, were not retired completely; instead they were delegated to less resource-intensive tasks.

In the end, we built up to the 4-server setup we use today: web server, database server, game/IRC server and a firewall. Because this built up organically, I never put any great thought into how the servers were networked. Until a couple of weeks ago, all servers were simply set up in a single network (LAN) for the servers, with one separate LAN for the desktop machines I have in my home. There was simply a small home switch in the rack cabinet where the servers reside, and each server had a single cable connected to that switch.

Now, a couple of weeks ago, I felt it was time to get rid of the small home-grade switch in the rack cabinet (mostly because it was loose in the rack and it was starting to annoy me). So I went on a hunt for a relatively cheap rack-mounted switch. The switch I found (and bought) ended up being more enterprise-grade than I expected to get for a reasonable price (the switch is the Netgear GS724T, a so-called "smart switch").

One major feature of the switch is what's called VLAN support. In simple terms, this feature allows the switch to be split up into multiple parts, effectively making it function as if there were multiple, separate switches instead of a single one.
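To make the "multiple separate switches" idea a bit more concrete, here is a minimal toy model in Python. It is only a sketch of port-based VLANs in general, not of how the Netgear firmware works internally, and the port numbers and VLAN IDs are made up for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class VlanSwitch:
    """Toy model of a switch with port-based VLANs (illustrative only)."""

    # Maps a physical port number to the VLAN ID that port belongs to.
    port_vlan: dict[int, int] = field(default_factory=dict)

    def assign(self, port: int, vlan_id: int) -> None:
        """Put a port into a VLAN."""
        self.port_vlan[port] = vlan_id

    def flood_targets(self, ingress_port: int) -> list[int]:
        """Ports that would receive a broadcast frame arriving on ingress_port."""
        vlan_id = self.port_vlan[ingress_port]
        return sorted(
            port
            for port, vid in self.port_vlan.items()
            if vid == vlan_id and port != ingress_port
        )


switch = VlanSwitch()
# Hypothetical layout: ports 1-3 in VLAN 10, ports 4-5 in VLAN 20.
for port in (1, 2, 3):
    switch.assign(port, 10)
for port in (4, 5):
    switch.assign(port, 20)

print(switch.flood_targets(1))  # [2, 3]
print(switch.flood_targets(4))  # [5]
```

A broadcast entering on port 1 never reaches ports 4 or 5, which is the same behaviour you would get from two physically separate switches.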

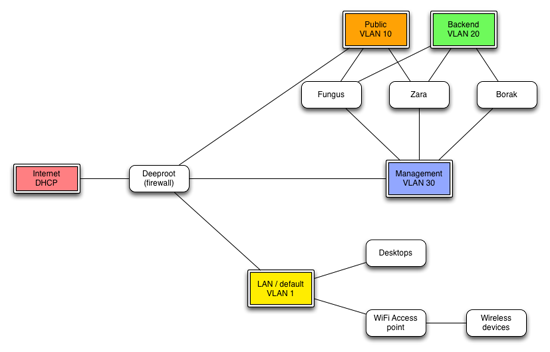

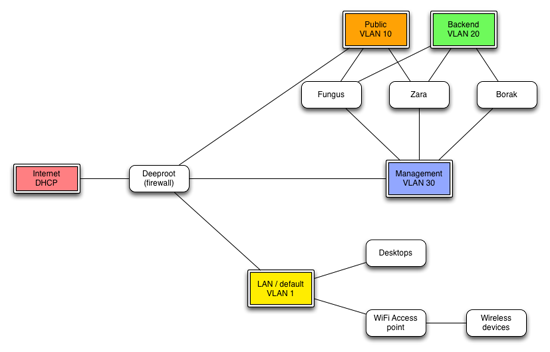

With this feature available to me, I decided to take a step back and build a more enterprise-grade network for the servers. So I sat down with my graphing tool and planned what I wanted to do. This is what I came up with:

As you can see, there are four networks in the chart. The Public network is meant for web and game server traffic. The Backend network is meant for database traffic. The Management network is meant for management traffic (for example backups and my own administration). Finally, the LAN network is there to allow me to separate my own machines from the server networks.
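The split described above can be written down as a small lookup table. This is just a sketch of the design in Python: the four network names come from the chart, while the traffic-class labels and the helper function names are my own illustrative choices and not part of any real configuration.

```python
# Which logical network carries which kind of traffic, following the design
# described above. The keys are illustrative labels, not real config values.
NETWORK_FOR_TRAFFIC = {
    "web": "Public",
    "game": "Public",
    "database": "Backend",
    "backup": "Management",
    "administration": "Management",
    "desktop": "LAN",
}


def network_for(traffic: str) -> str:
    """Return the network a given class of traffic should use."""
    try:
        return NETWORK_FOR_TRAFFIC[traffic]
    except KeyError:
        raise ValueError(f"unknown traffic class: {traffic!r}") from None


def traffic_on(network: str) -> list[str]:
    """List the traffic classes assigned to a network, alphabetically."""
    return sorted(t for t, n in NETWORK_FOR_TRAFFIC.items() if n == network)


print(network_for("database"))   # Backend
print(traffic_on("Management"))  # ['administration', 'backup']
```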

To actually implement this, I needed to buy a few extra network cards and a bunch of network cable (for those of you who care, the server cabling is strictly shielded (FTP) CAT.6 cable). Funny fact: the cables cost almost as much as the network cards these days...

Here's where I ended up on a tangent that cost me 14 hours of work... I bought the network cards I needed, and started to install them (one of the downtimes over this weekend)... only to notice that one of the servers needed a PCI-express card instead of the PCI cards I had. So, off to the store again to pick up a new card. With that done, I installed the network card (downtime #2)... only to find that it wasn't functioning properly. Le sigh... This was supposed to be the card for the cable between Deeproot and the Public network.

Ok, so I started to think "How do I get past this?", and came up with a plan involving combining the LAN and Public networks over a single cable (a fancy feature normally called trunking in network speak). Netgear's smart switch, however, does this a bit differently and I would need to do something called "VLAN tagging" on the traffic bound for at least one of these logical networks. Ok, so far so good. I had a plan. Unfortunately, there's a saying which goes like this: "No plan survives contact with the enemy". It turned out that the OS installed on Deeproot was old and the system was in a pretty weird state (functional, but not properly updated over the years, and effectively I couldn't update things), meaning I could not enable the VLAN tagging feature. So I thought "Ok then. I'll set up a virtual machine for the firewall and all will be well". But nope. The virtualization system was also not set up properly, and couldn't be installed.
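For the curious, VLAN tagging as standardised in IEEE 802.1Q means inserting four extra bytes into each Ethernet frame: a fixed marker (0x8100) followed by 3 bits of priority, 1 drop-eligible bit and a 12-bit VLAN ID. The Python sketch below only illustrates that layout. The function names are my own, the VLAN ID used in the example is arbitrary, and this is not what I (would have) configured on Deeproot; in practice the OS network stack does this for you.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value that marks a frame as 802.1Q-tagged


def build_vlan_tag(vlan_id: int, priority: int = 0, dei: int = 0) -> bytes:
    """Build the 4-byte 802.1Q tag for the given VLAN ID."""
    # The VLAN ID is a 12-bit field (0 and 4095 are reserved by the standard).
    if not 0 <= vlan_id <= 0xFFF:
        raise ValueError("VLAN ID must fit in 12 bits")
    if not 0 <= priority <= 7:
        raise ValueError("priority must fit in 3 bits")
    if dei not in (0, 1):
        raise ValueError("DEI is a single bit")
    tci = (priority << 13) | (dei << 12) | vlan_id
    # Network byte order: two unsigned 16-bit fields, TPID then TCI.
    return struct.pack("!HH", TPID_8021Q, tci)


def parse_vlan_tag(tag: bytes) -> tuple[int, int, int]:
    """Return (priority, dei, vlan_id) from a 4-byte 802.1Q tag."""
    tpid, tci = struct.unpack("!HH", tag)
    if tpid != TPID_8021Q:
        raise ValueError("not an 802.1Q tag")
    return tci >> 13, (tci >> 12) & 1, tci & 0xFFF


tag = build_vlan_tag(20)    # VLAN ID 20 is an arbitrary example value
print(tag.hex())            # 81000014
print(parse_vlan_tag(tag))  # (0, 0, 20)
```

The switch reads the VLAN ID from the tag to decide which logical network a frame on the shared cable belongs to.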

And here comes downtime #3, a longer one. What I ended up doing was to reinstall the whole system (now running Ubuntu Server 12.04 LTS) to get it into a working state. An hour or so later, the machine was a freshly installed system, and I spent additional time configuring the most basic things (mainly network cards, firewall rules and the mail server). Funnily enough, the network card that was not working properly under the old OS works fine now with the new Linux kernel. This allowed me to skip the whole VLAN tagging thing entirely and go back to the original plan.

At this point, there was no real change from before. All traffic was still running on the same network (the management one). Now was the time to start moving the traffic over to these newly created networks.

So I started with the easiest traffic, the traffic from the internet (you guys) to the website. I went over to the firewall and changed the forwarding for the web traffic to go over the Public network. Easy, right? Wrong. Everything stopped; no-one could access the site from the outside.

So off I went on tangent #2. It turns out that Debian, which Ubuntu is based on and which is also installed on fungus (the web server machine), changed the default behaviour of the firewall software I use (Shorewall) to drop packets that are considered "martians" (the name being the reason I'm writing this part :) ). In this context, a martian is a network packet coming in on a network card that originates from an IP which is not associated with that network card (yeah, it takes a while to wrap your head around that one). So, I loosened up the rules on that a bit, and things started to work properly again.
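If the martian definition is hard to picture, this tiny Python sketch may help. It follows the simplified definition given above and nothing more: the interface names and subnets are hypothetical, and a real Linux kernel decides this by consulting its routing table (reverse path filtering) rather than by a plain subnet comparison.

```python
import ipaddress

# Hypothetical interface names and subnets, purely for illustration.
INTERFACE_NETWORKS = {
    "eth0": ipaddress.ip_network("192.168.10.0/24"),
    "eth1": ipaddress.ip_network("192.168.20.0/24"),
}


def is_martian(interface: str, source_ip: str) -> bool:
    """True if source_ip does not belong to the subnet attached to interface.

    Simplified model: the real check is based on routes, not just subnets.
    """
    network = INTERFACE_NETWORKS[interface]
    return ipaddress.ip_address(source_ip) not in network


print(is_martian("eth0", "192.168.10.7"))  # False: source matches eth0's subnet
print(is_martian("eth0", "192.168.20.7"))  # True: that address belongs on eth1
```

This is roughly the trap I walked into: after the forwarding change, packets started showing up on a network card that, as far as the receiving machine was concerned, had no business carrying them.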

Back on track, the next thing I moved was the traffic between the web server and the database. This move was relatively pain-free and I want to think that the site became quicker by doing this (it feels quicker to me, but I don't have any actual benchmarks for this).

This is where things are now. There are a few more things I need to move (for example, game server database traffic hasn't been moved yet and currently goes over the management network). Also, I am contemplating limiting management traffic a bit further and setting up a VPN to get access to it in order to improve security.

In the end, this is all a big learning experience for me and something I wouldn't be likely to research in the detail I have if I didn't have FUMBBL. At times, it's extremely frustrating to deal with, but I truly am happy when things are working as I want them to, and that makes all this stuff worth the effort to me. After this weekend, FUMBBL has a network that is pretty much enterprise-grade and that makes me happier. In theory, it should be faster as well, despite me always having had a full gigabit network.

This turned out to be a long blog entry... Hope you enjoyed it :)

Comments

Posted by PigStar-69 on 2012-11-18 18:25:13

none of this made any sense to me but sir i certainly appreciate the work you have put in.

where would we be without you ;-)


Posted by andr_e on 2012-11-18 18:25:43

It's simply too much for me :P

Well done :D


Posted by Jasfmpgh on 2012-11-18 18:28:56

Thanks for all the time and effort you put in Big C.

Posted by Endzone on 2012-11-18 18:32:10

I was with you all the way upto "10 years ago".

Great work - thanks!


Posted by MattDakka on 2012-11-18 18:39:59

Thanks for all your hard work and all the time spent for us!

Posted by Overhamsteren on 2012-11-18 18:40:16

You sir are a gentleman and a wizard

Posted by ClayInfinity on 2012-11-18 19:00:17

Rated 6 for the picture! Cool graphics Christer! And those Cyanide guys think they have graphics!!

Posted by Ehlers on 2012-11-18 21:32:22

One StarPlayer to rule them all, one StarPlayer to find them, One Starplayer to bring them all and in the darkness bind them

Awesome blog, awesome Deeproot rule them all.

Did not understand any of it, but nice with a storytelling before nighttime.


Posted by SavageJ on 2012-11-18 23:19:37

I'm no network expert, but I can follow this. It looks like a good design to me. Smart switches are cool. :-)

Posted by Qaz on 2012-11-18 23:23:25

Borak is the gaming server (if memory serves me right) And he has dirty player what does that tell you!

Great Work Christer the things you wrap your brain around.


Posted by Garion on 2012-11-18 23:43:56

Great stuff christer, also love the new improvement you made to the current games page.

Posted by Kelkka on 2012-11-19 00:12:56

You could easily put up your own company, manage the IT side and program stuff to make the profit. Absolutely impressive how one man can do it all, very nice :)

Posted by the_Sage on 2012-11-19 00:37:00

Rated 6 for Deeproot

Posted by Jeffro on 2012-11-19 01:01:59

Rated 6. There's a team theme in here somewhere, and as soon as I sober up...

Posted by Dhaktokh on 2012-11-19 11:08:38

Wizard!!

Posted by Badoek on 2012-11-19 15:58:50

all this and no plead for donations? amateur!

still rated 6 though :D
